@Photo by Pixabay

Problem

At summitto we use Prometheus for monitoring our infrastructure and Alertmanager for sending alerts. For reliability we wanted to make our monitoring system highly available, with two Prometheis and Alertmanagers on different machines, which meant that the Alertmanagers needed to run in cluster mode to talk to each other and de-duplicate alerts. This cluster traffic needs to be secured, and since we don’t use any kind of service mesh like Consul or Istio, our initial thought was to use mutual TLS between the Alertmanagers, which we can do fairly easily with our reverse proxy[1], Haproxy. We didn’t really want to go to the trouble of setting up a service mesh or VPN just so these two containers could communicate securely.

However, this turned out to have two major problems:

1. Alertmanager currently doesn’t support presenting a client TLS certificate to its cluster peers. That means we needed some kind of forward proxy to add TLS to outgoing traffic, and Haproxy is not a forward proxy.

2. Alertmanager uses the memberlist library for maintaining the cluster, and that requires both UDP and TCP traffic. However, Haproxy does not support binding to UDP ports.

For context, our base setup was:

- Two machines (machine1 and machine2) in the monitoring cluster, each meant to run Alertmanager in a Docker container.

- One Haproxy instance on each machine, running as a systemd service (we don’t run Haproxy in a container because of Docker’s spotty support for IPv6).

Approach

To solve Problem 1, we created an Haproxy backend on each machine in our monitoring cluster that had a single server pointed to Haproxy on the other machine. Since an Alertmanager only ever sends cluster traffic to its one peer, this made it possible to add TLS and (ab)use Haproxy as a crude forward proxy. That means that the local Alertmanager needed to send outgoing traffic to Haproxy instead of directly to its peer, and then Haproxy would do its mutual TLS magic with the other Haproxy and all would be well.

This complicates things a bit, though, because Alertmanager runs in a container and Haproxy does not, so there is no convenient address that Alertmanager could use to connect to Haproxy. The simplest way for the containerized Alertmanager to send traffic to Haproxy is through a Unix Domain Socket (UDS), which Haproxy has good support for. Alertmanager doesn’t support sending traffic directly to a UDS, though, so we decided to use socat to forward traffic from a local port in the container to the mounted UDS that Haproxy is listening on.

To solve Problem 2, we opted to tunnel UDP traffic from an Alertmanager over TCP into a separate UDS, which Haproxy would forward to a different port from the TCP traffic. This allowed us to tell UDP and TCP traffic apart on the peer’s end.

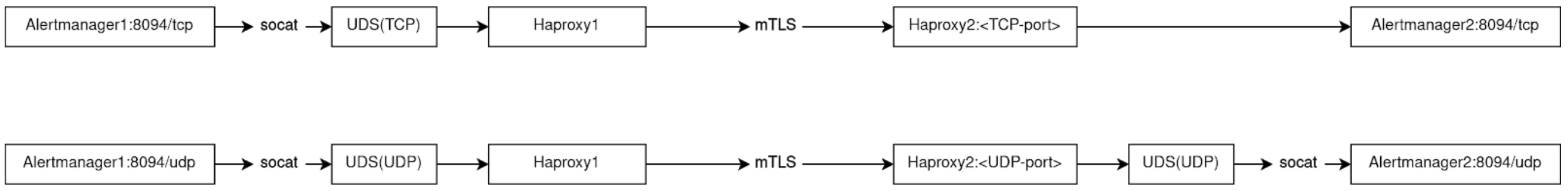

Too many words! Time for a diagram:

We now have three UDSs on each machine. Two of them are created by Haproxy for outbound TCP and UDP traffic to the Alertmanager’s peer. The third is for inbound UDP traffic from the peer and is created by socat running inside the Alertmanager container.

Implementation

Haproxy

The first thing we did was configure Haproxy. The configuration below covers both directions, although only the outbound side was needed at first:

```
# frontend for inbound traffic from this alertmanager's peer
frontend alertmanager
    bind :<UDP-port> ssl strict-sni verify required ca-file /path/to/ca.pem crt /path/to/cert.pem
    bind :<TCP-port> ssl strict-sni verify required ca-file /path/to/ca.pem crt /path/to/cert.pem
    bind :::<UDP-port> ssl strict-sni verify required ca-file /path/to/ca.pem crt /path/to/cert.pem
    bind :::<TCP-port> ssl strict-sni verify required ca-file /path/to/ca.pem crt /path/to/cert.pem
    mode tcp
    option tcplog
    # add some ACLs here with ssl_c_s_dn(CN) if you want to restrict access to
    # clients with a specific certificate
    use_backend local-tcp if { dst_port eq <TCP-port> }
    use_backend local-udp if { dst_port eq <UDP-port> }

# frontend for outbound TCP traffic to this alertmanager's peer
frontend peer-tcp
    bind unix@/var/lib/haproxy/alertmanager/outbound-tcp.sock
    mode tcp
    option tcplog
    default_backend peer-tcp

# frontend for outbound UDP traffic to this alertmanager's peer
frontend peer-udp
    bind unix@/var/lib/haproxy/alertmanager/outbound-udp.sock
    mode tcp
    option tcplog
    default_backend peer-udp

# backend for inbound TCP traffic to this alertmanager
backend local-tcp
    mode tcp
    # Because Haproxy doesn't run in a container and can't use Docker's built-in
    # DNS server to resolve container names, we run a container with a fixed IP
    # address that exposes Docker's DNS server with socat. This lets us resolve
    # containers by their name, which is done here for the alertmanager.
    server alertmanager alertmanager:<listen-port> check resolvers docker init-addr none

# backend for inbound UDP traffic to this alertmanager
backend local-udp
    mode tcp
    # haproxy does not like absolute paths here, for some reason
    server alertmanager unix@alertmanager/inbound-udp.sock check

# backend for outbound TCP traffic to this alertmanager's peer
backend peer-tcp
    mode tcp
    server alertmanager <peer-domain>:<TCP-port> check check-sni <peer-domain> ssl verify required sni str(<peer-domain>) ca-file /path/to/ca.pem crt /path/to/cert.pem

# backend for outbound UDP traffic to this alertmanager's peer
backend peer-udp
    mode tcp
    server alertmanager <peer-domain>:<UDP-port> check check-sni <peer-domain> ssl verify required sni str(<peer-domain>) ca-file /path/to/ca.pem crt /path/to/cert.pem
```

The local-tcp and local-udp backends were dead at this point, but that could be left for later. So we started off testing UDP traffic since that was more complicated than handling TCP traffic. On one machine we ran socat to send data from stdin to the outbound UDP socket:

```
machine1 # socat - UNIX-CONNECT:/var/lib/haproxy/alertmanager/outbound-udp.sock
```

On the other machine we had the opposite setup, forwarding traffic from the inbound UDP socket to stdout:

```
machine2 # socat UNIX-LISTEN:/var/lib/haproxy/alertmanager/inbound-udp.sock,fork,reuseaddr,user=haproxy,group=haproxy -
```

The inbound socket needs to be owned by the haproxy user so that Haproxy can write to it, and it also needs to be inside the Haproxy chroot directory (/var/lib/haproxy in the stock configuration). At this point we were able to enter text in the terminal on machine1 and see it appear in the terminal on machine2.
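The chroot requirement most likely also explains the comment about absolute paths in the local-udp backend. Haproxy binds its listeners before it chroots, so the absolute paths on the bind lines work, but it connects to servers at runtime, after the chroot, so a server's socket path has to be expressed relative to the chroot directory. This is an interpretation, not something the setup above verified. The helper below (chroot_relative is a hypothetical name, not part of any tool) derives that form from the host path:

```sh
# Hypothetical helper: turn an absolute host path inside Haproxy's chroot
# into the chroot-relative form used on a `server ... unix@...` line.
chroot_relative() {
    chroot_dir=${1%/}   # drop a trailing slash, if any
    path=$2
    case $path in
        "$chroot_dir"/*)
            # strip the chroot prefix; after chroot() the path resolves from "/"
            printf '%s\n' "${path#"$chroot_dir"/}"
            ;;
        *)
            echo "error: $path is not inside $chroot_dir" >&2
            return 1
            ;;
    esac
}

# prints: alertmanager/inbound-udp.sock
chroot_relative /var/lib/haproxy /var/lib/haproxy/alertmanager/inbound-udp.sock
```

A path outside the chroot is rejected, since Haproxy could not reach it after chrooting anyway.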

Alertmanager

The Alertmanager container needed to run three instances of socat, as well as the Alertmanager itself. That was done with a custom entrypoint:

```sh
#!/usr/bin/env sh

set -x

ALERTMANAGER_PID=''

# kill all child processes (the socat processes and Alertmanager) and exit
cleanup() {
    pkill -P $$
    exit 0
}

# forward SIGHUP to Alertmanager so it reloads the configuration file
reload() {
    if [ -n "${ALERTMANAGER_PID}" ]; then
        kill -s HUP "${ALERTMANAGER_PID}"
    fi
}

# wait for the given shell command to succeed
wait_for_success() {
    while ! sh -c "$1"; do
        sleep 1
    done
}

# handle SIGTERMs from docker
trap cleanup TERM

# handle SIGHUPs for reloading the configuration
trap reload HUP

# outbound TCP traffic for peer
socat TCP4-LISTEN:8084,fork,reuseaddr UNIX-CONNECT:/sockets/outbound-tcp.sock &

# outbound UDP traffic for peer
socat UDP4-RECVFROM:8084,fork,reuseaddr UNIX-CONNECT:/sockets/outbound-udp.sock &

# inbound UDP traffic from peer, needs to be writable by the haproxy user
socat UNIX-LISTEN:/sockets/inbound-udp.sock,fork,reuseaddr,user=${HAPROXY_UID},group=${HAPROXY_GID} UDP4:localhost:9094 &

# wait for the socats to bind to their ports/sockets
# (numeric address, since -n disables name lookups in many nc implementations)
wait_for_success 'nc -zn 127.0.0.1 8084'
wait_for_success 'nc -znu 127.0.0.1 8084'
wait_for_success 'socat /dev/null UNIX-CONNECT:/sockets/inbound-udp.sock'

# ensure that our user can write to the data directory
chown -R nobody: "${DATA_DIR}"

# start Alertmanager and save PID
sudo -u nobody alertmanager "$@" &
ALERTMANAGER_PID=$!

# need to `wait` in a loop so that SIGHUPs don't exit the whole script:
# a trapped signal makes `wait` return early with a status above 128
while kill -0 "${ALERTMANAGER_PID}" 2>/dev/null; do
    wait "${ALERTMANAGER_PID}"
done

# Alertmanager exited on its own: stop the socat processes as well
cleanup
```
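One weakness of wait_for_success is that it retries forever, so a socat process that fails to bind (for example because a stale inbound-udp.sock is still on disk) leaves the container hanging at startup. A bounded variant might look like this; wait_for_success_within is a hypothetical name, and the attempt count is an arbitrary choice:

```sh
# Hypothetical bounded variant of wait_for_success: retry a shell command
# up to $1 times, one second apart, and report failure instead of hanging.
wait_for_success_within() {
    attempts=$1
    cmd=$2
    while [ "$attempts" -gt 0 ]; do
        if sh -c "$cmd"; then
            return 0
        fi
        attempts=$((attempts - 1))
        # only sleep if another attempt is coming
        if [ "$attempts" -gt 0 ]; then
            sleep 1
        fi
    done
    echo "gave up waiting for: $cmd" >&2
    return 1
}

# possible use in the entrypoint, cleaning up children before failing:
# wait_for_success_within 30 'nc -zn 127.0.0.1 8084' || { pkill -P $$; exit 1; }
```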

The HAPROXY_UID, HAPROXY_GID, and DATA_DIR environment variables are passed at runtime, and the UDS directory is mounted at /sockets. All arguments to the entrypoint are forwarded to Alertmanager, which makes it quite simple to configure. We ran into a couple of issues while writing this:

- Initially the script only trapped SIGTERM, so when we tried to reload Alertmanager with SIGHUP the script quit immediately without cleaning up the forked processes. That left /sockets/inbound-udp.sock on the filesystem, which made the socat process that binds to that socket fail the next time the container was started. (It is possible to get socat to bind to an existing socket, but we preferred to clean everything up on exit.)

- Because the script was originally written to handle only SIGTERM, the final wait was not in a while-loop, so once we trapped SIGHUP, the signal would be forwarded properly to the Alertmanager process and then the script would exit. We thought that simply putting the wait in a while-loop would fix that, but the script still kept exiting on reloads. It turns out that “the reception of a signal for which a trap has been set will cause the wait built-in to return immediately with an exit status greater than 128” (from the Bash manual). And we had initially used set -e, so of course as soon as wait returned a non-zero status the script exited. Lesson learned.

- Using UDP4-LISTEN instead of UDP4-RECVFROM when tunneling UDP traffic over TCP with socat breaks things in mysterious ways. See the socat man page for details; the specific problem we had was with large UDP packets being split into multiple TCP packets. When creating a silence in one Alertmanager, we observed two error messages in the other Alertmanager’s logs saying “got invalid checksum for UDP packet (xxxxxx, xxxxxx)”.

  What was probably happening is that TCP, being a byte stream, does not preserve UDP datagram boundaries: the silence message gossiped between the Alertmanagers was large enough to be read on the receiving side in two chunks, and each chunk was delivered to the other Alertmanager as a separate UDP packet, hence the two invalid checksums. This didn’t occur with the regular UDP gossip messages every ~1s because they are small enough to arrive in one piece.

  Using UDP4-RECVFROM fixes that because it creates a new TCP connection for each UDP packet instead of re-using the same connection (which also explains why we previously saw only one connection to the UDP port in the Haproxy logs each time Alertmanager was started).
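The wait behaviour from the second point is easy to reproduce in isolation. This is a minimal standalone demo, not part of the entrypoint: sleep 2 stands in for Alertmanager, and a background subshell plays the role of whoever sends the reload signal.

```sh
# Standalone demo, not the production entrypoint: a trapped signal makes
# `wait` return early with a status above 128 (128 + signal number).
got_hup=0
on_hup() {
    got_hup=$((got_hup + 1))
}
trap on_hup HUP

# stand-in for Alertmanager: a child process that runs for two seconds
sleep 2 &
child=$!

# after one second, send SIGHUP to this script ($$ is the script's PID,
# even inside the subshell)
( sleep 1; kill -s HUP $$ ) &

# `|| first_status=$?` records the status without tripping `set -e`;
# a bare `wait` under `set -e` would end the script right here
first_status=0
wait "$child" || first_status=$?
echo "first wait returned $first_status"

# keep waiting until the child has really exited
while kill -0 "$child" 2>/dev/null; do
    wait "$child" || :
done
echo "child exited; SIGHUPs handled: $got_hup"
```

The first wait reports a status above 128 even though the child is still running, which is exactly why a single wait at the end of a script with a SIGHUP trap is not enough.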

Anyway, that was the entrypoint. Next came configuring Alertmanager itself. Remember that traffic to the peer needs to go to the local ports that socat is bound to. This means that the Alertmanager’s listen port and advertised port cannot be the same, since Alertmanager and the outbound socat processes run in the same container and cannot both bind the same port. That’s why, in the entrypoint, the socat processes for outbound traffic listen on port 8084, but inbound traffic is forwarded to port 9094. So we ended up with the following --cluster.* arguments:

```
--cluster.listen-address 0.0.0.0:9094
--cluster.peer 127.0.0.1:8084
--cluster.advertise-address 127.0.0.1:8084
```

The first argument tells Alertmanager to listen for cluster traffic on port 9094 on all interfaces in the container. It’s important that it listens on all interfaces rather than just localhost; otherwise Haproxy, which forwards inbound TCP traffic to it directly through the local-tcp backend, would not be able to reach it.

The second argument tells Alertmanager that its peer is listening on local port 8084, though that’s actually just the UDP/TCP outbound socat processes that forward the traffic to Haproxy.

The third argument sets the advertise address, which is the address Alertmanager tells its peers to contact it at. Note that this address actually refers to local port 8084 of the peer’s container, not of the advertising Alertmanager. This works because both Alertmanagers have exactly the same configuration: each has traffic sent to its local port 8084 forwarded to its peer, so it’s safe to advertise a local address.
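The relationship between the two ports can be captured in a small helper. This is a hypothetical sketch (cluster_flags is not part of the original entrypoint), assuming the ports 9094 and 8084 used above:

```sh
# Hypothetical helper: print the --cluster.* arguments for a given cluster
# listen port and outbound socat port, one word per line.
cluster_flags() {
    listen_port=$1    # where inbound peer traffic is delivered (9094 above)
    outbound_port=$2  # where the outbound socat processes listen (8084 above)
    if [ "$listen_port" -eq "$outbound_port" ]; then
        # Alertmanager and socat share the container, so they cannot share a port
        echo "error: listen port and outbound port must differ" >&2
        return 1
    fi
    printf '%s\n' \
        --cluster.listen-address "0.0.0.0:$listen_port" \
        --cluster.peer "127.0.0.1:$outbound_port" \
        --cluster.advertise-address "127.0.0.1:$outbound_port"
}

cluster_flags 9094 8084
```

The printed words are the same three arguments listed earlier, and the guard rejects a layout in which Alertmanager and the outbound socat processes would compete for one port. Because the peer and advertise addresses both point at the outbound port, identical configuration on both machines is what makes the scheme work.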

Conclusion

We set up an Alertmanager cluster that secures cluster traffic using mutual TLS provided by Haproxy, without having to set up a VPN or service mesh. One thing that helped the process enormously was being able to test literally everything locally, in containers. We use Ansible for configuring our machines (Haproxy, etc.) and deploying our services (mainly Docker containers), and Molecule for testing our roles. Being able to apply all this configuration and test it locally made things far easier than they would have been if we had to use physical machines.

Even so, it still took a lot of time and effort, and we’re lucky that we only needed two Alertmanagers. This design does not scale very well because of all the weird proxying/tunneling that’s necessary, and we’d probably take another look at a service mesh/VPN if we needed three or more.